A New Era of AI Regulation

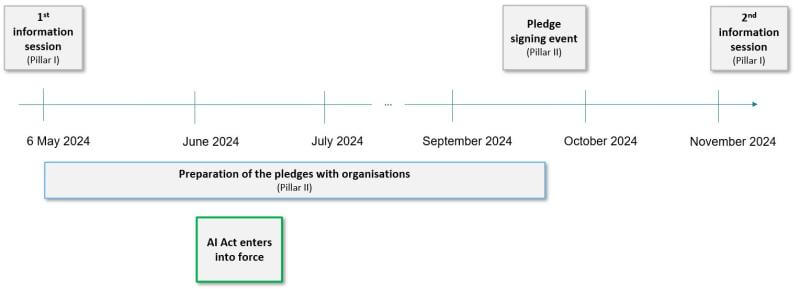

The AI Act, which officially entered into force on August 1, 2024, aims to create a harmonized internal market for AI across the EU.

The Act establishes clear rules for AI developers and users based on a product safety and risk-based approach, which categorizes AI systems according to their potential risks to citizens’ rights and safety:

- Minimal Risk: Most AI systems, such as spam filters and AI-enabled recommendation systems, fall under this category. These systems pose minimal risk to the public and therefore face no obligations under the AI Act, although companies can voluntarily adhere to additional codes of conduct.

- Specific Transparency Risk: AI systems like chatbots must clearly disclose to users that they are interacting with a machine, and certain AI-generated content, including deep fakes, must be labeled as such. Providers are required to design their systems so that synthetic audio, video, text, and images are marked in a machine-readable format and detectable as artificially generated or manipulated.

- High Risk: AI systems deemed high-risk, such as those used for recruitment, credit scoring, or autonomous robots, are subject to strict regulations. These include risk mitigation measures, high-quality data sets, detailed documentation, human oversight, and stringent cybersecurity standards. Regulatory sandboxes will be established to promote responsible innovation and ensure compliance with these rigorous standards.

- Unacceptable Risk: The AI Act bans AI systems that pose a clear threat to fundamental rights. This includes AI applications that manipulate human behavior to circumvent users’ free will, systems that allow governments or companies to carry out ‘social scoring’, and certain applications of predictive policing. Some uses of biometric systems, such as emotion recognition in the workplace, are also prohibited.

The AI Act introduces additional rules for general-purpose AI models—highly capable AI systems designed to perform a wide range of tasks. These models will be subject to regulations that ensure transparency along the value chain and address potential systemic risks.

Implementation and Oversight

EU Member States have until August 2, 2025, to designate national competent authorities responsible for enforcing the AI Act and conducting market surveillance activities.

The European Commission has established a new AI Office, which will become the key implementation body at EU level, ensuring that the rules are applied consistently across Member States.

Three advisory bodies will support the implementation of the AI Act:

- The European Artificial Intelligence Board, which will ensure uniform application of the Act across Member States.

- A scientific panel of independent experts, which will provide technical advice and monitor potential risks associated with general-purpose AI models.

- An advisory forum, composed of a diverse set of stakeholders, which will offer additional guidance to the AI Office.

Companies that fail to comply with the new rules could face severe penalties. Fines can reach 7% of global annual turnover for violations involving banned AI applications, 3% for violations of other obligations, and 1.5% for supplying incorrect information.

Transition Period and Future Steps

The majority of the AI Act’s rules will take effect on August 2, 2026. However, the prohibitions on AI systems deemed to pose an unacceptable risk will apply after just six months (from February 2, 2025), and the rules for general-purpose AI models after 12 months (from August 2, 2025).

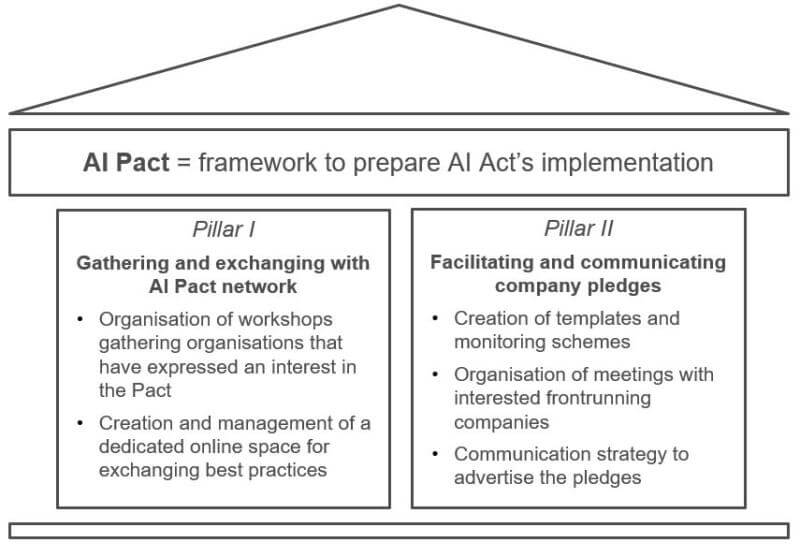

To bridge the transitional period, the European Commission has launched the AI Pact, an initiative encouraging AI developers to voluntarily adopt key obligations of the AI Act ahead of the legal deadlines.

The European Commission is also preparing guidelines to clarify how the AI Act should be implemented, including the development of standards and codes of practice.

A call for expression of interest has been opened to develop the first general-purpose AI Code of Practice, allowing for broad stakeholder engagement.

CEC European Managers’ Position Paper on AI

Amid these regulatory developments, CEC European Managers has taken a proactive stance on AI by publishing a position paper focusing on Artificial Intelligence, leadership, and partnership.

CEC European Managers’ position paper on Leadership and partnerships for purposeful AI

This position paper, developed by the Working Group on AI led by President Maxime Legrand, outlines the organisation’s vision for leveraging AI in a way that aligns with ethical standards, promotes responsible leadership, and fosters collaboration across sectors.

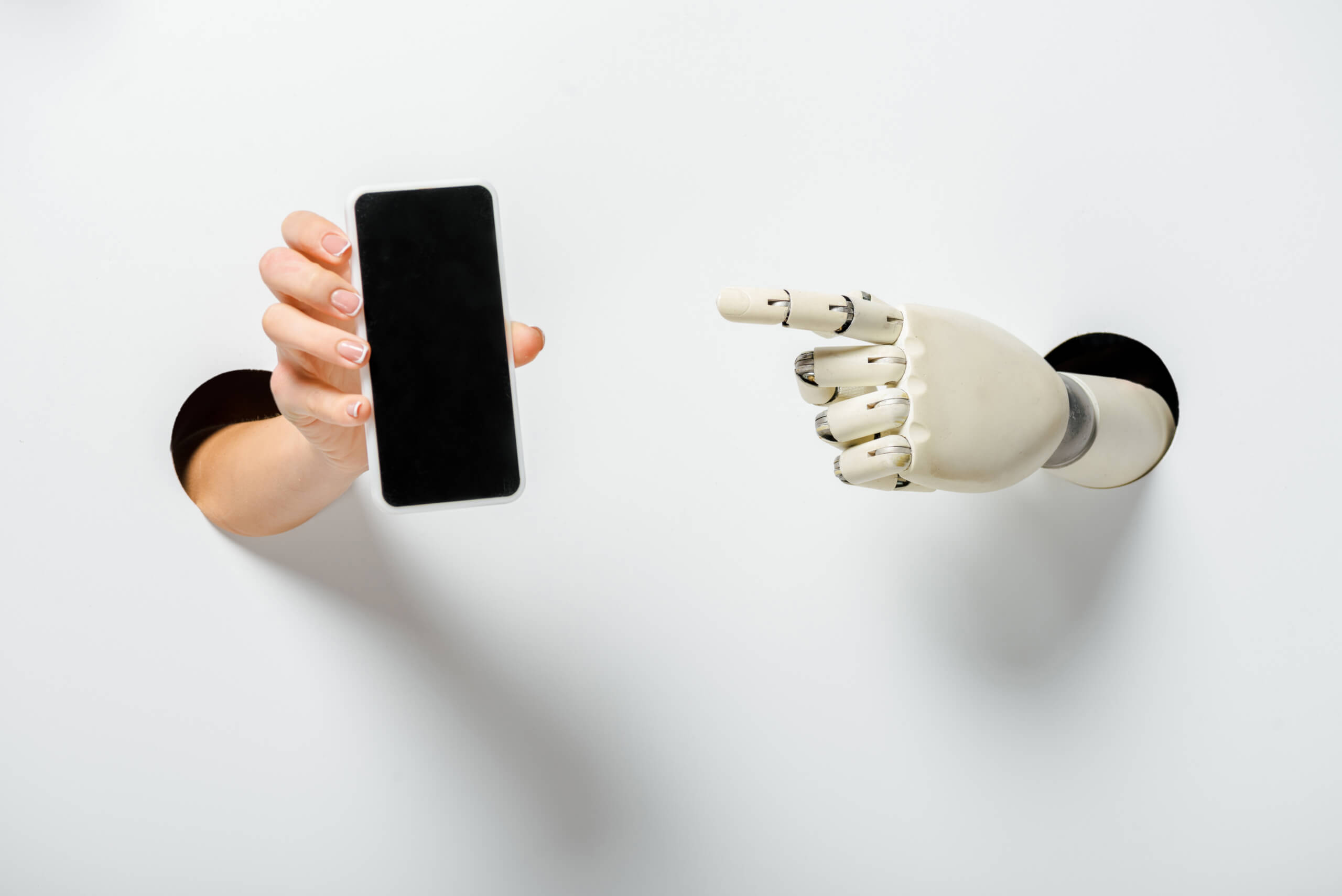

CEC European Managers’ position paper emphasizes the role of managers and leaders in striking a balance between implementing AI tools in the workplace and upholding regulation that protects fundamental rights while enabling innovation, echoing the objectives of the AI Act.

CEC European Managers’ member organisations and sectoral federations have acknowledged that AI is often used not to replace labour but to reorganise tasks.

This shifts the focus of leadership on AI towards soft skills, context understanding, ethical considerations, systems thinking, futures thinking, team empowerment, and life cycle design within an increasingly complex work and business context.

Leaders are key actors in adapting AI tools to the specific purpose, needs, and competencies of the organisation.

CEC European Managers’ contribution underscores the need for continuous dialogue and cooperation among policymakers, leaders, and stakeholders. Digitalisation was also one of CEC’s seven key priorities for the European Elections.

The AI Act represents a significant step in ensuring that AI technologies serve the public good. CEC European Managers’ engagement highlights the critical role of leadership and partnership in navigating this transformative era.